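1. Purpose

When training a model, we often do not know whether the `epochs` hyperparameter is set correctly: will this many epochs train a good enough model?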

2. Steps

Installation

Log the loss value in the model training code

```python
from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter("logs")
for i in range(100):
    writer.add_scalar("y=2*x", 2 * i, i)
writer.close()
```

Start TensorBoard

```bash
tensorboard --logdir=logs
```

`--logdir`: the folder that contains the log files to read
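Because `--logdir` points at a folder rather than a single file, one way to compare several `epochs` settings is to write each run into its own subfolder; TensorBoard lists every subfolder as a separate run. The sketch below is only an illustration: the helper names `run_log_dir` and `log_demo_run`, the `epochs_<n>` folder naming, and the toy `1 / (step + 1)` curve are assumptions made for this example, not part of the original code.

```python
import os


def run_log_dir(base_dir, epochs):
    """Hypothetical helper: one subfolder per epochs setting, e.g. logs/epochs_100."""
    return os.path.join(base_dir, f"epochs_{epochs}")


def log_demo_run(base_dir, epochs):
    """Write a toy curve for one run into its own subfolder."""
    # Imported here so the path helper above can be used without PyTorch installed.
    from torch.utils.tensorboard import SummaryWriter

    writer = SummaryWriter(run_log_dir(base_dir, epochs))
    for step in range(epochs):
        # Toy value standing in for a real training loss.
        writer.add_scalar("loss", 1.0 / (step + 1), step)
    writer.close()
```

Calling `log_demo_run("logs", 100)` and `log_demo_run("logs", 1000)` and then running `tensorboard --logdir=logs` should show the two runs side by side on the same `loss` chart.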

3. Example

```python
import torch
from torch.utils.tensorboard import SummaryWriter

# Training data for y = 2 * x
x_data = torch.Tensor([[1.0], [2.0], [3.0]])
y_data = torch.Tensor([[2.0], [4.0], [6.0]])

epochs = 1000


class LinearModel(torch.nn.Module):
    def __init__(self):
        super(LinearModel, self).__init__()
        self.linear = torch.nn.Linear(1, 1)

    def forward(self, x):
        y_pred = self.linear(x)
        return y_pred


model = LinearModel()
loss = torch.nn.MSELoss(reduction='mean')
optimizer = torch.optim.SGD(model.parameters(), lr=0.001)

writer = SummaryWriter("logs")
for epoch in range(epochs):
    y_pred = model(x_data)
    l = loss(y_pred, y_data)
    print(l.item())
    # Log the loss for this epoch so TensorBoard can plot it
    writer.add_scalar('loss', l.item(), epoch)
    l.backward()
    optimizer.step()
    optimizer.zero_grad()
writer.close()

print("w:", model.linear.weight.item())
print("b:", model.linear.bias.item())

x_test = torch.tensor([4.0])
y_test = model(x_test)
print("y_pred:", y_test.item())
```

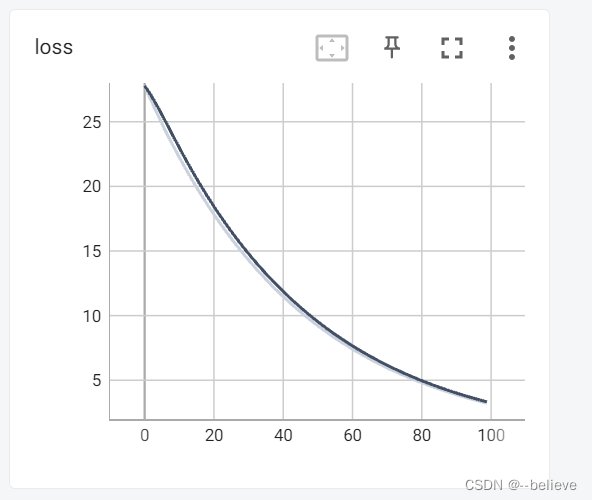

3.1 epochs set too low

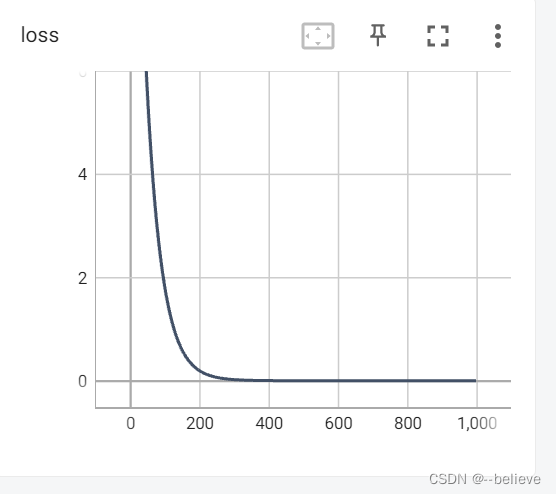
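Reading the curve by eye can be backed up with a simple numeric check. The sketch below is a heuristic, not a standard rule: it compares the mean loss over the last `window` epochs with the mean over the window before it, and treats the curve as flat when the relative improvement falls below `rel_tol`. The function name `has_plateaued`, the default values, and the synthetic curves are all assumptions chosen for illustration.

```python
def has_plateaued(losses, window=50, rel_tol=0.01):
    """Return True if the mean loss over the last `window` epochs improved by
    less than `rel_tol` (relative) compared with the window before it."""
    if len(losses) < 2 * window:
        return False  # not enough history to compare two windows
    previous = sum(losses[-2 * window:-window]) / window
    recent = sum(losses[-window:]) / window
    if previous == 0:
        return True  # loss is already zero; nothing left to improve
    return (previous - recent) / abs(previous) < rel_tol


# Synthetic curves standing in for logged losses (not real training results).
still_falling = [1.0 / (epoch + 1) for epoch in range(100)]
flattened = still_falling + [0.01] * 200

print(has_plateaued(still_falling))
print(has_plateaued(flattened))
```

If the check returns `False` at the end of training, the loss was still dropping when the run stopped, which suggests `epochs` may be too low. In the training loop, the same list could be filled by appending `l.item()` each epoch.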

3.2 epochs set appropriately